🔤 AI makes you dumber (unless…)

Unless you do this one thing to upgrade how you use it

Hey there! I’m Robert. Welcome to a free edition of my newsletter. Every week, I share my story of building my dreams in public with bootstrapping a startup in AI, Alignment, and Longevity. These newsletters include my reflections on the journey, and topics such as growth, leadership, communication, product, and more. Subscribe today to become the person and leader that people love, respect, and follow.

We’re turning to AI for life guidance.

The most common use of AI in 2025 isn’t search or coding—it’s emotional support.

Yet most AI has no clue how to actually support you.

Let me show you why that matters—and what I’m doing about it.

May 2025

Our family dog Bean became paralyzed.

I found that Bean—whom I love deeply—had a tumor growing on the side of her head.

This had been going on for a while, and I had no knowledge of it.

I pay for her care. Her vet bills. Her insurance.

But no one told me. Not a word.

I found out later, by accident, while petting her during a visit.

And when I did, I was livid.

Not because of the money.

But because it felt like neglect.

I asked: “Why didn’t you take her to the vet?”

Crickets.

Avoidance. A betrayal of care.

I couldn’t believe it.

I work this hard every day, every week, and y’all can’t just get off the couch and take her to the vet?

The labs came back benign, but that wasn’t the end of it.

Her health hasn’t been good because her diet and exercise have been poor.

And then she ended up paralyzed shortly after, from the lower legs down. PARALYZED.

I shed so many tears.

She’s getting better as time goes on, but her life won’t be the same. She’s still pretty happy though—dogs are so impressive in their resilience.

Late last year I lost my pup Nibbler. And now this was happening in front of my eyes.

It opened up a wound.

A familiar one, built over years of quiet tension, cultural silence, and unspoken expectations.

I wanted to set a boundary with my family financially and emotionally.

But I also didn’t want to abandon them.

I love them. I want to see them do well.

How do you hold both of those truths at once?

So I did what many of us do now when trying to navigate the complexities of life.

I turned to AI for life guidance.

🔤 This Week’s ABC

Advice: AI makes you dumber

Breakthrough: Maintain agency while using AI

Challenge: Challenge your beliefs

📖 Advice: AI Makes You Dumber (Without Context and Intentionality)

Okay, I turned to my therapist first many times, and THEN I turned to AI—calm down folks.

But when we look at the data…

The most popular use case for AI in 2025?

Therapy & Companionship.

Let that sink in.

We are not just asking these machines to solve problems.

We are asking them to care.

Or, at least pretend to.

Or, in my case, to coach me through my life dilemmas.

But here’s the disconnect:

Most AI today still doesn’t understand context, nuance, or lived experience. It can mimic empathy without embodying it.

It can say the right things.

But does it know why those things matter?

Is it just going to be that “Yes” friend who always agrees with you, or will it challenge your assumptions and help you evolve your own truths?

Will it help you become who you want to become?

Or is this just another form of hyper-biasing like social media, but even deeper?

I am pretty obsessed with my own self-growth, so I ask myself:

What am I becoming, as I use AI?

People who know me know that I really value agency and autonomy. I create content, and when I consume it, I don't consume it mindlessly.

I consume it intentionally.

I like Thich Nhat Hanh's perspective that everything around us can be food.

We feed our brains different things and they shape us.

With what I’ve learned from neuroscience, I know that to be true. You are what you do.

What you do includes what you feed yourself—information, people around you, your environment, your real food, etc.

Whatever we feed ourselves is the model that we evolve.

So, let’s ponder this—what if we keep feeding ourselves the AI dopamine drip?

If I always offload my thinking and reconciliation of issues to AI—what does that make me?

Apparently, it gets me closer to becoming a toddler again.

Shit.

“A study by Michael Gerlich at SBS Swiss Business School has found that increased reliance on artificial intelligence (AI) tools is linked to diminished critical thinking abilities. It points to cognitive offloading as a primary driver of the decline.”

I don’t know about you, but personally I want to keep improving my critical thinking abilities over time.

Or at the very least into old age, I want to keep what I have. I don’t want to see it diminish.

But on the other hand, I do want to take advantage of these tools to see how I can improve my critical thinking abilities AND become a better version of myself.

I see critical thinking as key to true agency.

The question then becomes:

How can I maintain agency while using AI to navigate life?

The answer:

Context.

(And intentionality: you need to learn when to offload critical reasoning and when not to, and how best to use the tools, but that’s for another article.)

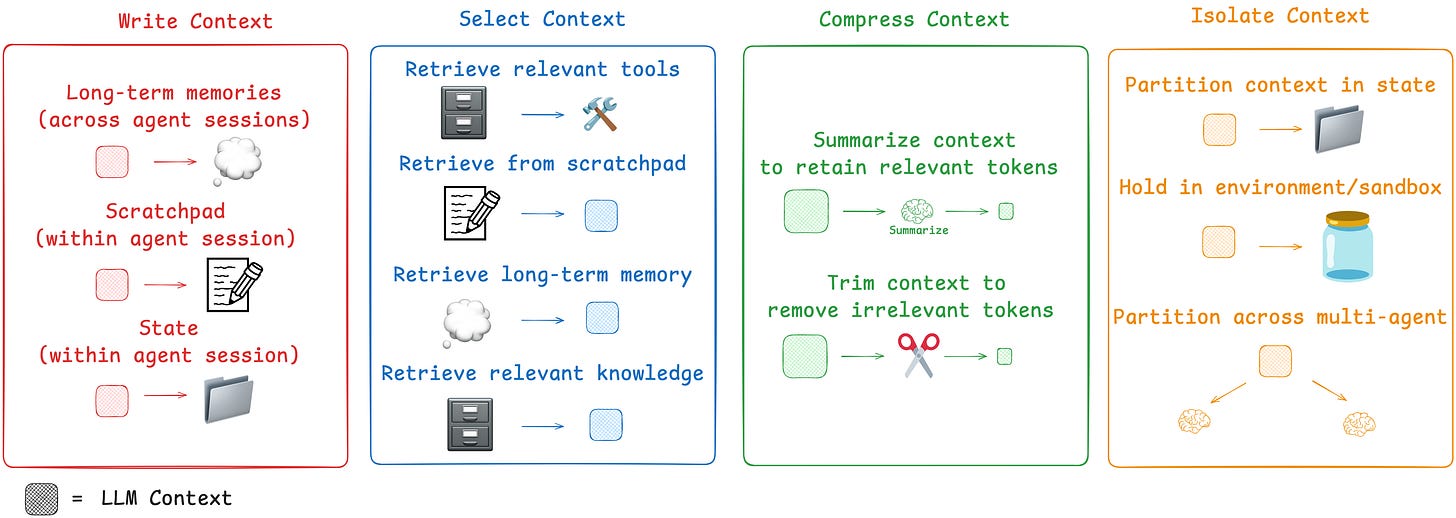

It’s the new hot thing—Context Engineering.

How can I give AI enough context about myself to help it help me better?

It’s actually quite a tough challenge, one that bridges technology and the humanities.

Let’s dive in.

🚀 Breakthrough: Solving My Own Problems

First of all, I don’t want generic advice from any AI.

That’s not the way the world is going.

The way the world is going is towards hyper-personalization.

Specifically, I wanted to talk to AI that helped me through my family dynamics in a personalized way—not a generic way.

This is something I’ve thought very deeply about.

When You Have Problems

Think about it… When you have a problem you’re struggling with, what’s a healthy thing to do?

Tell a friend or family member.

Find support.

Everybody can relate to bringing a problem to a family member or friend and, within a couple of conversation turns, feeling seen and heard.

And everybody can also relate to bringing it to a family member or friend where, no matter how many conversation turns you go through, you NEVER feel seen, heard, or understood.

I’ve certainly found this in my life.

Just as with AI, the difference is that the former remember you. They remember your context. They use language and active listening skills that help you feel seen and understood.

The latter do little or none of the above.

The former is great, the latter is not so much.

From a life experience perspective, I would like to mirror that experience for an AI: help the user feel seen, heard, and understood in as few conversation turns as possible.

In great Product work, we often think about minimizing time to value (TTV).

Intersecting this with the human experience in this very subjective space of life guidance, the subjectivity of perceptions and experiences must be the first problem to solve.

What does it mean to “feel seen and heard”?

That’s a problem at the n=1, individual person level.

High level—that’s how I think of hyper-personalization.

Minimize time to feeling seen and heard.

Or…

Minimize conversation turns for the user to feel seen and heard when looking for life guidance.
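To make that metric concrete, here is a minimal sketch of how "turns to feeling seen" might be counted from a conversation log. Everything here is an assumption for illustration: the `Turn` type, the `felt_seen` self-report flag, and the function name are hypothetical and are not part of Clarity or the Epistemic Me SDK.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Turn:
    speaker: str             # "user" or "assistant"
    text: str
    felt_seen: bool = False  # hypothetical self-report recorded after an assistant reply


def turns_to_feeling_seen(conversation: List[Turn]) -> Optional[int]:
    """Count assistant replies up to and including the first one
    the user marked as making them feel seen. None if it never happened."""
    assistant_turns = 0
    for turn in conversation:
        if turn.speaker != "assistant":
            continue
        assistant_turns += 1
        if turn.felt_seen:
            return assistant_turns
    return None
```

A lower number is better, and `None` is the "infinite conversation turns" case from above: the user never felt seen at all.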

Product and design

I like solving things completely, so from first principles…

We need a robust model and representation of me (who am I? what do I care about?) so that AI can best understand me. A self-model that reflects who you are becoming, not just who you've been.

We need a way for me to co-evolve that model and representation of me, seamlessly, and keep it up to date with my life experiences. A system that adapts with your emotions, memory, history, and context.

We need to be able to handle subjective cases—how impactful is one experience to one person versus another person? We need feedback loops that reinforce your agency, not replace it.

We need AI that becomes more meaningful over time, because you become more meaningful to it. It has more context and learnings about you.

It should be responsive AND relational.

It’s not just a co-pilot.

It is a living mirror that reflects the beliefs you hold: the subjective YOUness that makes you, YOU.

And it allows you to co-evolve that mirror.

That’s exactly what I’ve been building with my open source project and startup, Epistemic Me.

Recently, I’ve been facing this tough and complex family dynamic problem: how do I set better boundaries with my family?

After a few therapy sessions around it, I still wanted to explore my beliefs and patterns at a deeper level to get hyper-personalized guidance.

With that problem burning, I set out to design and build something that would help me.

So I built an app I’m calling Clarity, bootstrapped my own Self Model with my own SDK, and got the answers I wanted to navigate the situation and my life.

Feeling uncertain? Get Clarity

Feeling anxious? Get Clarity

Feeling insecure? Get Clarity

Here’s how it works:

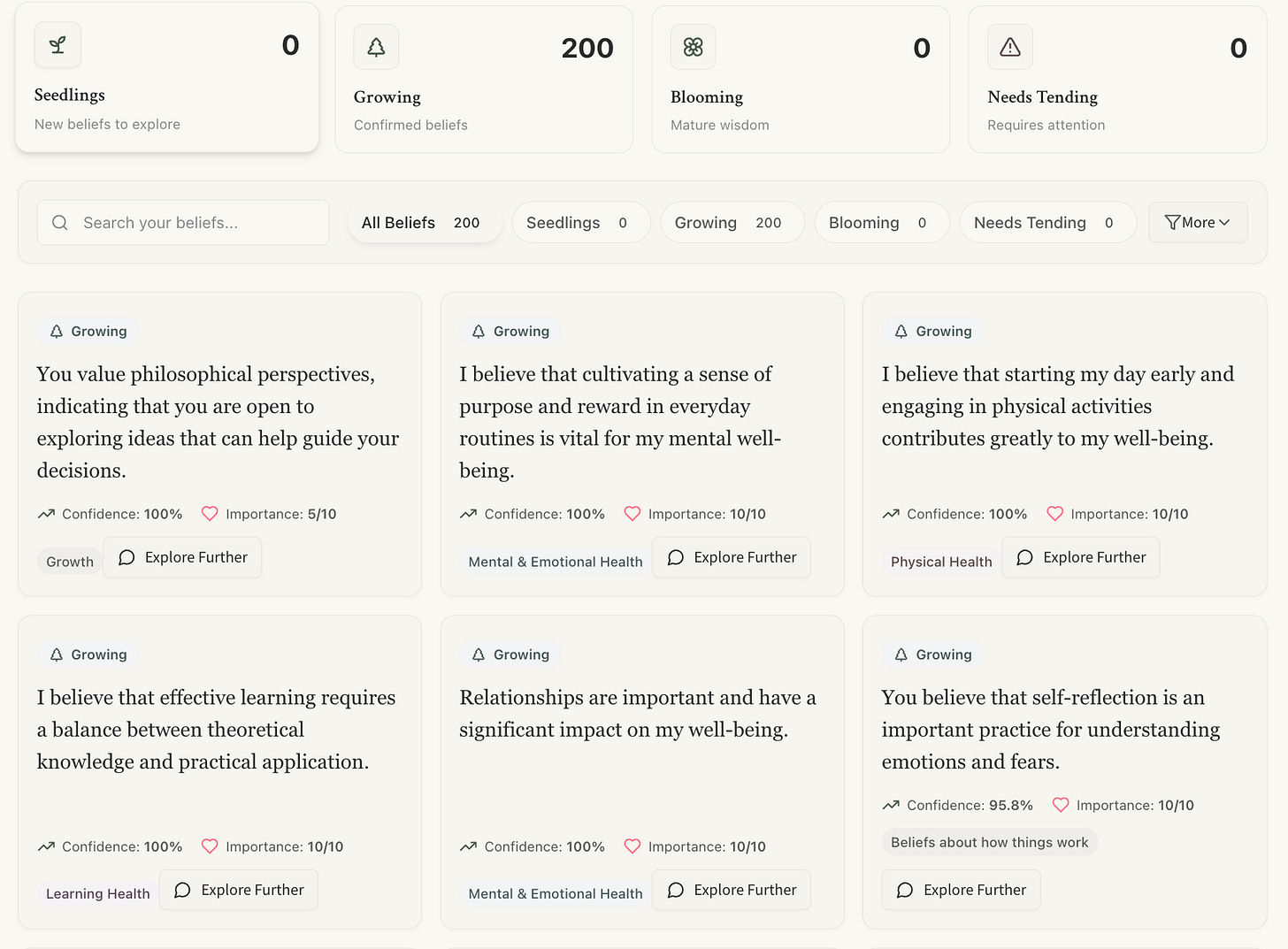

Self Model: It builds and updates a living model of me—what I believe, what I care about, who I want to become.

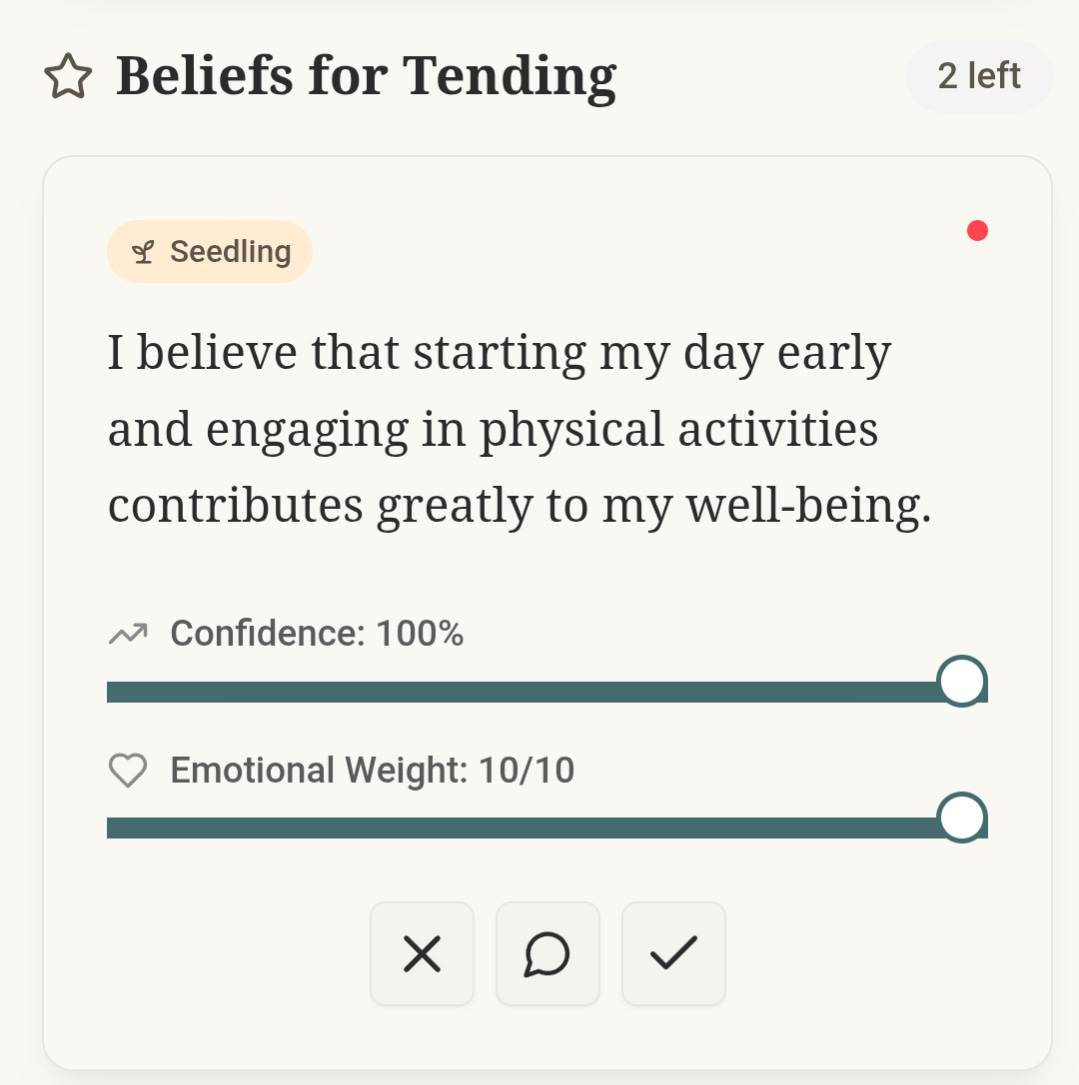

Belief Garden: My beliefs are tracked like plants—seedlings, rooted truths, ideas that need tending.

Reflective Dialogue: When I ask Clarity about something hard—like my family dynamics—it pulls from that self-model to give truly personalized guidance, reflecting my beliefs.
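For the technically curious, the three pieces above could be represented with a data structure along these lines. This is a rough sketch under my own assumptions, not the actual Epistemic Me SDK: the class names, the `plant` and `tend` methods, and the exact stage labels are hypothetical, chosen to mirror the garden metaphor.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Stage(Enum):
    SEEDLING = "seedling"            # newly detected from a conversation
    ROOTED = "rooted"                # a truth the person has confirmed they hold
    NEEDS_TENDING = "needs tending"  # an idea flagged for another look


@dataclass
class Belief:
    statement: str
    stage: Stage = Stage.SEEDLING


@dataclass
class SelfModel:
    target_self: str = ""                               # who the person wants to become
    garden: List[Belief] = field(default_factory=list)  # the Belief Garden

    def plant(self, statement: str) -> Belief:
        """Add a newly detected belief as a seedling."""
        belief = Belief(statement)
        self.garden.append(belief)
        return belief

    def tend(self, statement: str, stage: Stage) -> Belief:
        """Move an existing belief to a new stage."""
        for belief in self.garden:
            if belief.statement == statement:
                belief.stage = stage
                return belief
        raise KeyError(statement)
```

The point of keeping the stage on each belief is that the person, not the system, decides when a seedling becomes a rooted truth.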

I started with a UX where I can tend to my Belief Garden daily. Beliefs start as seedlings that are detected from my conversations with Clarity.

Then, after a lot of conversations with Clarity, I brought up the issue I’m facing.

Take a look at how my beliefs and personal context are reflected in the dialogue about setting boundaries with my family.

Clarity accesses my Belief Garden, and who I want to become (my Target Self), and then personalizes a response to me.
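Here is a minimal sketch of what that personalization step could look like: folding the Target Self and a handful of beliefs into the instructions sent to a language model. The function name, the prompt wording, and the `max_beliefs` cutoff are assumptions for illustration and not how Clarity is actually implemented.

```python
from typing import List


def build_system_prompt(target_self: str, beliefs: List[str], max_beliefs: int = 5) -> str:
    """Assemble a plain-text system prompt from a person's Target Self and beliefs."""
    lines = [
        "You are a reflective guide for one specific person.",
        f"Who they want to become: {target_self}",
        "Beliefs they currently hold:",
    ]
    # Cap the list so the prompt stays short; which beliefs to pick is left open here.
    lines += [f"- {belief}" for belief in beliefs[:max_beliefs]]
    lines.append(
        "Reflect these beliefs back where relevant, and challenge them "
        "when they conflict. Do not simply agree."
    )
    return "\n".join(lines)


prompt = build_system_prompt(
    target_self="Someone who sets healthy boundaries with family",
    beliefs=[
        "Maintaining good health requires conscious choices",
        "Feeling anxious doesn't define my worth or ability to succeed",
    ],
)
```

The last instruction is deliberate: without it, feeding a model your own beliefs risks turning it into the "Yes" friend described earlier.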

I really felt heard and seen when reading these phrases in particular, mirroring my own beliefs:

“It’s commendable that you’re actively seeking strategies to protect your own well-being, as you firmly believe that cultivating a sense of purpose in your routines is essential for your mental health.”

“Frame it positively, by stating that it will allow you to focus on your own health, reflecting your belief that maintaining good health requires conscious choices.”

“Ground yourself in your belief that feeling anxious doesn’t define your worth or ability to succeed.”

There’s much more fine-tuning of prompts and AI evals to do, but I’m using it every day for problems around navigating uncertainty and more.

It isn’t perfect (yet), but it is a hell of a lot better than generic AI responses where I have to retrain ChatGPT, Claude, or Perplexity on my context when the memory in the chat window fizzles out.

This one’s a bit personal so I won’t share more than that, but here’s another screenshot of the Belief Garden so you can get a sense of what other context Clarity has on me and how I co-evolve that.

And here’s a deep dive into a belief, where I can see how aligned this belief is to my Target Self, and explore any tensions the system detects with the belief from my interactions.

I’ve come to find that solving hyper-personalization is really hard.

It is hard because it not only requires the Technical, Product, and Design chops…

It requires a nuanced understanding of Neuroscience, and cross-disciplinary expertise in Philosophy (and particularly in Epistemology—what is knowledge? What is truth?).

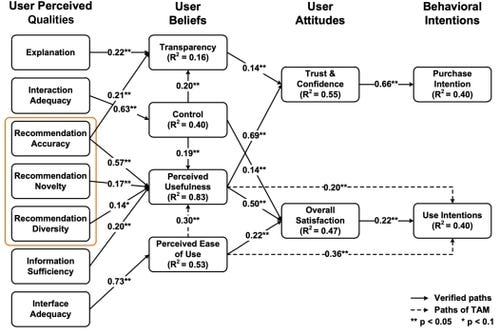

And to give you an understanding of the inherent complexity of implementing and designing a Belief System Modeling engine, here’s what that may look like from a Machine Learning lens to objectively calculate subjectivity for users and their beliefs to inform a recommendation engine:

It’s a hard challenge!

That’s what we’ve done here with Epistemic Me—make it easy for people to hyper-personalize their AI/LLM apps by modeling and evolving context with the people behind the user accounts.

That’s how Clarity knows about me at a deeper level.

And eventually, going through this process with Clarity helped me reframe the situation and understand myself better:

→ Maybe this wasn't just about Bean.

→ Maybe this was about feeling unseen.

→ About needing to be the caretaker... again.

→ About the invisible cost of emotional labor.

→ About feeling so utterly alone in the family space.

And that was what ultimately helped me feel conviction in setting better boundaries with my family.

I truly believe that the world needs Aligned AI, and to do that, AI must understand and model you.

All the nuances of you, not just the surface level things.

That way it can truly align to you.

Because—how can AI understand us, if we don’t fully understand ourselves?

As I keep using these tools to become a better version of myself while maintaining high agency, I’ll keep sharing my journey here.

And we’re all on this journey together into the future, so I’ll continue sharing in public how I’m solving these very human problems we all face.

💥 Challenge: Question How You AI

Think through how you use AI.

Are you offloading too much?

Are you decreasing your critical reasoning skills?

Think it through, and adjust accordingly.

→ Reach out if you’re interested in your own Model of Self!

Note: remember to give it as much context about you as possible. In particular, I like to instruct it not to be a yes-man, because AI sycophancy is emerging as a real problem.

Liked this article?

💚 Click the like button.

Feedback or addition?

💬 Add a comment.

Know someone that would find this helpful?

🔁 Share this post.

P.S. If you haven’t already, check out my other newsletter, ABCs for Building The Future, where I reflect on my founder’s journey building a venture in the open. You’ll find my learnings on product, leadership, entrepreneurship, and more, in real time!

P.P.S. Want reminders on entrepreneurship, growth, leadership, empathy, and product?

Follow me on..